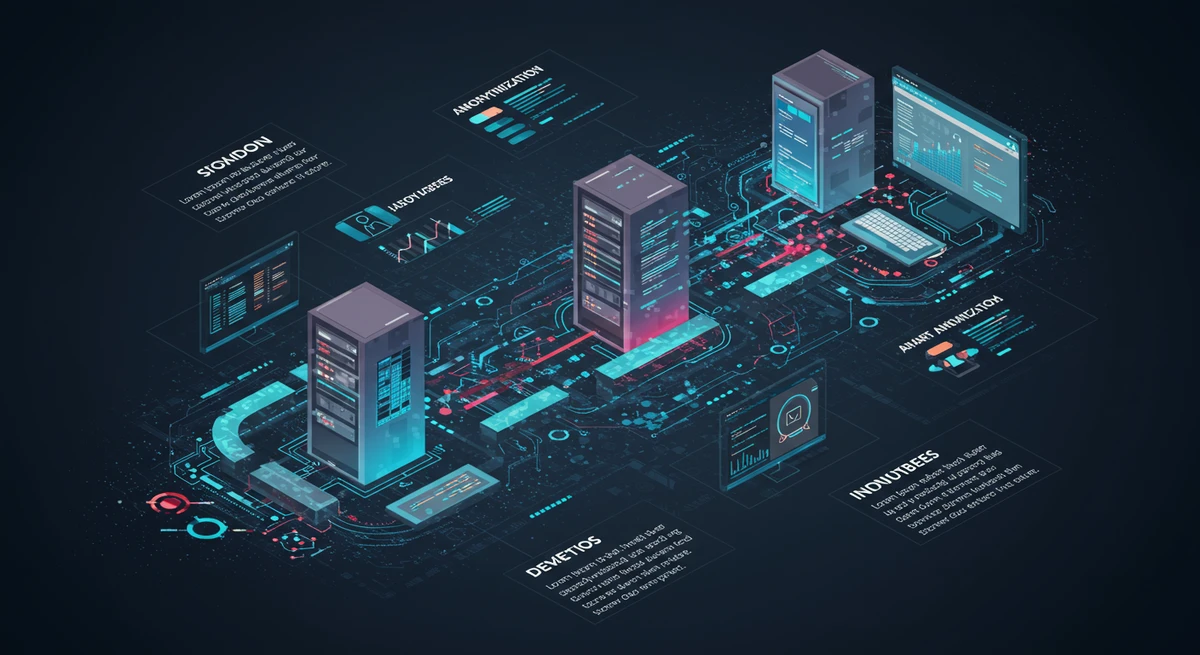

From Bottlenecks to Agility: How Smart Anonymization Accelerates DevOps

The client’s challenges

The bank wanted to secure its test environments by anonymizing sensitive customer data. However, the first solution created more problems than it solved:

- Loss of data integrity: datasets were overly truncated and altered, making them unusable for integration and UAT.

- Slower release cycles: critical testing phases had to fall back on production data, creating security risks.

- Higher infrastructure costs: duplicate test environments were maintained, increasing complexity and expenses.

One objective remained: ensuring regulatory compliance without compromising developer agility or project delivery speed.

Our intervention: expertise and approach

Talan Switzerland experts redesigned the anonymization strategy around two key principles:

- Preserve data quality – anonymization techniques were fine-tuned to ensure datasets retained consistency and functional integrity.

- Integrate seamlessly into DevOps – anonymization was automated within the CI/CD pipeline, providing developers with compliant, ready-to-use data.

This agile approach turned anonymization from a bottleneck into a real accelerator.
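To illustrate what "retaining consistency" can mean in practice, here is a minimal Python sketch of deterministic pseudonymization, written as a step a CI/CD job could call. The case study does not say which techniques were used, so everything here is an assumption for illustration: the keyed-hash approach, the `SECRET_KEY` value, the function names, and the sample tables are all hypothetical. Keyed hashing is a form of pseudonymization, and whether it satisfies a given regulatory definition of anonymization depends on how the key and the data are handled.

```python
import hashlib
import hmac

# Hypothetical secret; a real pipeline would load it from a secrets manager.
SECRET_KEY = b"demo-key-not-for-production"


def pseudonymize(value: str, field: str, length: int = 12) -> str:
    """Map a sensitive value to a stable token using a keyed hash (HMAC-SHA256)."""
    # Including the field name keeps equal values in different fields from colliding.
    message = f"{field}:{value}".encode("utf-8")
    digest = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    return digest[:length]


def anonymize_rows(rows, sensitive_fields):
    """Return copies of the rows with the sensitive fields replaced by tokens."""
    return [
        {
            key: pseudonymize(str(val), key) if key in sensitive_fields else val
            for key, val in row.items()
        }
        for row in rows
    ]


# Hypothetical sample data: two tables linked by customer_id.
customers = [{"customer_id": "C-1001", "name": "Alice Example", "segment": "retail"}]
accounts = [
    {"account_no": "A-1", "customer_id": "C-1001", "balance": 2500},
    {"account_no": "A-2", "customer_id": "C-1001", "balance": 90},
]

anon_customers = anonymize_rows(customers, {"customer_id", "name"})
anon_accounts = anonymize_rows(accounts, {"customer_id"})
```

Because the same input always yields the same token, the join between the two tables on `customer_id` still works after masking, while non-sensitive fields such as `balance` and `segment` are left untouched for functional testing.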

The results: before vs after

Before our intervention: slow release cycles, inflated costs, and persistent PII risks.

After our solution:

- Faster time-to-data: developers received anonymized datasets automatically, reducing waiting times

- No more production data in test: eliminating unnecessary risk exposure

- Streamlined collaboration: global teams accessed secure and reliable data, boosting efficiency

Industry analysts such as Gartner call the first of these gains “time-to-data”, and for this bank it became a decisive factor in delivering value faster.

A differentiating approach

Our success was based on:

- A business-first view of anonymization, aligning security with development needs

- A collaborative approach with DevOps teams, ensuring adoption and efficiency

- A balanced methodology, avoiding the common trap of “good enough” anonymization that kills usability

Key takeaways

This project shows how anonymization, when embedded into DevOps processes, can remove bottlenecks, accelerate delivery, and reduce risks at the same time.

Sources

Patrice Ferragut - Jérôme Gransac - Lucas Challamel